As AI becomes more embedded in market research, organizations are rightly asking deeper questions about how these technologies are designed, governed and used responsibly. Transparency, human oversight and data governance now matter as much as speed or scale.

Below we share our responses to Esomar’s 20 questions to help buyers of AI-based services — a resource that offers a practical framework for evaluating AI in research. It covers everything from supplier credentials and explainability to ethics, security and data governance.

Our goal is to be transparent about how we use AI today, how we manage risk and oversight, and how we continue to evolve our approach as the technology and regulatory landscape change.

To learn about Rival’s latest AI innovations, please visit rivaltech.com/ai.


A. Company profile

1. What experience and know-how does your company have in providing AI-based solutions for research?

2. Where do you think AI-based services can have a positive impact for research?

3. What practical problems and issues have you encountered in the use and deployment of AI?

B. Explainability and fit for purpose

6. How do the algorithms deployed deliver the desired results?

C. Is the AI capability/service trustworthy, ethical and transparent?

7. What are the processes to verify and validate the output for accuracy, and are they documented?

8. What are the limitations of your AI models and how do you mitigate them?

D. How do you provide human oversight of your AI system?

E. What are the Data Governance protocols?

19. Data sovereignty: Do you restrict what can be done with the data?

20. Ownership of outputs: Are you clear about who owns the output?

Rival Technologies has been actively developing and deploying AI-based capabilities for market research over the past several years, with focused investment during the last three years in applied AI for real research workflows. This work builds on decades of experience across the Rival Group, ensuring AI development is grounded in methodological rigor rather than technology experimentation alone.

Rival’s AI innovation is intentionally structured across two environments. The core Rival platform prioritizes stability, data security and enterprise readiness. In parallel, Rival Labs operates as a dedicated sandbox where researchers, developers and AI specialists test emerging capabilities through rapid experimentation. This separation allows Rival to explore new technologies, learn quickly and iterate without compromising the integrity, compliance posture or reliability of the production platform.

In addition to product development, Rival invests in building client capability. The Innovation Insiders program is a yearlong, hands-on initiative designed to help insight teams move from AI curiosity to practical adoption. The program emphasizes shared learning, structured experimentation and real-world application, reflecting Rival’s belief that responsible AI adoption requires education, context and human judgment alongside technology.

AI-based services can have a positive impact when they are applied to clearly defined research problems and integrated thoughtfully into existing workflows. In Rival’s experience, AI delivers value by significantly enhancing research operations through time savings, quality improvements and scale. By taking on repetitive, manual and operational tasks that can be tedious for researchers, AI reduces friction in the research process and frees teams to focus on higher-value work such as analysis, interpretation and storytelling. AI also increases the reach and influence of insights and enables new approaches that were previously difficult or impractical to deliver at scale.

At an operational level, AI helps address long-standing inefficiencies such as manual data processing, slow analysis cycles and the growing burden of unstructured data. These applications improve speed and consistency while reducing researcher fatigue.

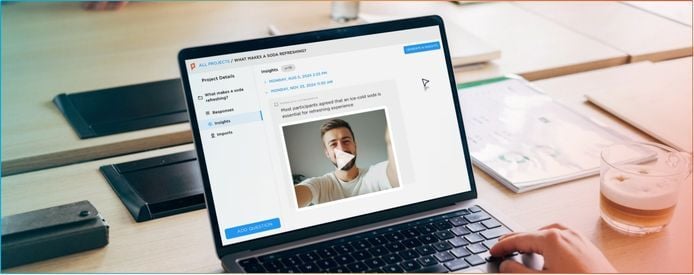

AI also enhances the effectiveness of insights by helping teams communicate findings more clearly and at greater scale. Features such as AI-assisted summaries, insight videos and interactive outputs help insights reach stakeholders faster and in formats that support decision-making.

Finally, AI enables new possibilities in research design and analysis, particularly around unstructured data and conversational engagement. Agentic systems create opportunities to orchestrate multi-step workflows and connect tools in ways that support deeper, more continuous insight generation.

In practice, generative AI has worked well for tasks related to operational efficiency and the analysis of qualitative and unstructured data at scale, reducing processing time from days or weeks to minutes. These applications align closely with current model strengths and can be deployed with clear human oversight.

Well-known challenges remain with generative models as a whole. Because they are probabilistic rather than deterministic, outputs can vary and occasionally miss context or intent. Hallucinations, model drift and rapid model updates require continuous evaluation and testing. As a result, building AI into enterprise-grade research platforms requires governance, validation and discipline. These challenges are why Rival has intentionally built its AI-driven workflows with a human-in-the-loop posture, ensuring quality assurance, contextual judgment and accountability remain part of every step where AI is applied.

Security, privacy and compliance also shape deployment decisions. Rival rigorously tests and assesses new models as they emerge, including their impact on data protection and platform integrity. As a result, AI features often take longer to move from experimentation to formal release than other platform features, reflecting the additional testing, validation and review required for enterprise use. Rival Labs exists to absorb this experimentation, allowing the core platform to remain stable and trusted.

Another practical challenge is that risk tolerance varies from client to client. Some organizations restrict AI use entirely, while others want to adopt aggressively. Rival addresses this by making AI opt-in by design, allowing clients to control adoption pace and scope. This approach also requires additional operational and legal rigor, including clearer documentation and coverage within statements of work, to ensure all parties understand how AI is being used and the associated considerations.

AI within Rival’s platform is designed to assist researchers with specific tasks rather than replace human judgment. Each AI capability supports a defined job in the research process, such as analyzing open-ended responses, generating follow-up questions, summarizing findings or packaging insights for stakeholders.

For example, within the platform, AI identifies themes by analyzing large volumes of qualitative data, including text and video transcripts. These themes are accompanied by supporting quotes and video clips, along with indicators that help researchers assess confidence and quality. AI can also assist during survey design by generating or refining questions that researchers can review and edit.

AI-generated outputs are always visible and clearly labeled. Within the platform, insights produced by AI are marked as “AI Insights,” and survey elements generated by AI are identified and editable. AI features are opt-in by design, and certain capabilities require an explicit request for access. This additional level of control reflects the fact that some organizations maintain strict internal AI policies and ensures AI is only used intentionally and with appropriate oversight.
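As an illustration only, the opt-in and labeling behavior described above could be sketched as follows. This is not Rival's implementation: the feature keys, the `GATED_FEATURES` set and the function names are hypothetical, chosen to show features that are off by default, capabilities that need explicit access, and a visible label on AI output.

```python
from dataclasses import dataclass, field
from typing import Optional, Set

# Hypothetical feature keys; which capabilities are gated is an assumption.
GATED_FEATURES = {"unstructured_data_agent"}


@dataclass
class AIFeatureSettings:
    # Both sets start empty, so every AI feature is off by default (opt-in).
    enabled: Set[str] = field(default_factory=set)
    access_granted: Set[str] = field(default_factory=set)


def is_feature_active(settings: AIFeatureSettings, feature: str) -> bool:
    """A feature runs only if the client enabled it and, for gated
    capabilities, access was explicitly granted on request."""
    if feature not in settings.enabled:
        return False
    if feature in GATED_FEATURES and feature not in settings.access_granted:
        return False
    return True


def label_output(text: str, ai_generated: bool) -> dict:
    """Attach a visible label so AI-assisted content is distinguishable."""
    label: Optional[str] = "AI Insights" if ai_generated else None
    return {"text": text, "label": label}
```

Because both sets start empty, a new account would have no AI feature active until someone deliberately turns one on.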

For key AI features, Rival also maintains internal documentation that explains how the capability works, how AI is used, and how security and privacy are handled, supporting transparency for researchers and internal stakeholders.

Rival does not build proprietary foundation AI models. Instead, it integrates established large language models through enterprise-ready APIs (with strict security controls already in place) and selects models based on the specific task being performed.

Different models are used for different jobs. Agent-based capabilities that involve reasoning and multi-step decision-making use different models than workflow-based features such as summarization or tone refinement. Rival’s primary production model is provided by OpenAI, and the team also evaluates and works with other providers, including Google and Anthropic, as part of ongoing development and testing.
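A minimal sketch of task-based model selection, under stated assumptions, might look like the following. The task names, provider labels and model identifiers are placeholders rather than the models Rival actually uses; the point is only that each job maps to an explicitly configured model.

```python
from dataclasses import dataclass
from enum import Enum


class TaskKind(Enum):
    AGENT_REASONING = "agent_reasoning"   # multi-step, agent-based work
    SUMMARIZATION = "summarization"       # workflow-based feature
    TONE_REFINEMENT = "tone_refinement"   # workflow-based feature


@dataclass(frozen=True)
class ModelChoice:
    provider: str  # placeholder label, not a real vendor mapping
    model: str     # placeholder identifier, not a real model name


# Hypothetical routing table: one explicit entry per supported task.
ROUTES = {
    TaskKind.AGENT_REASONING: ModelChoice("provider-a", "reasoning-model"),
    TaskKind.SUMMARIZATION: ModelChoice("provider-a", "fast-model"),
    TaskKind.TONE_REFINEMENT: ModelChoice("provider-a", "fast-model"),
}


def select_model(task: TaskKind) -> ModelChoice:
    """Return the configured model for a task, failing loudly if none exists."""
    try:
        return ROUTES[task]
    except KeyError:
        raise ValueError(f"No model configured for task {task!r}") from None
```

Keeping the mapping in one table means a model swap for a single task is a reviewable, one-line change.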

Rival’s internal development focuses on how these models are orchestrated, governed and embedded into research workflows in ways that support transparency, flexibility and human oversight.

AI features within Rival’s platform are designed around specific tasks and delivered through structured prompts, evaluation logic and human review. Rather than training proprietary models on client data, Rival relies on foundation models and applies them through carefully designed workflows.

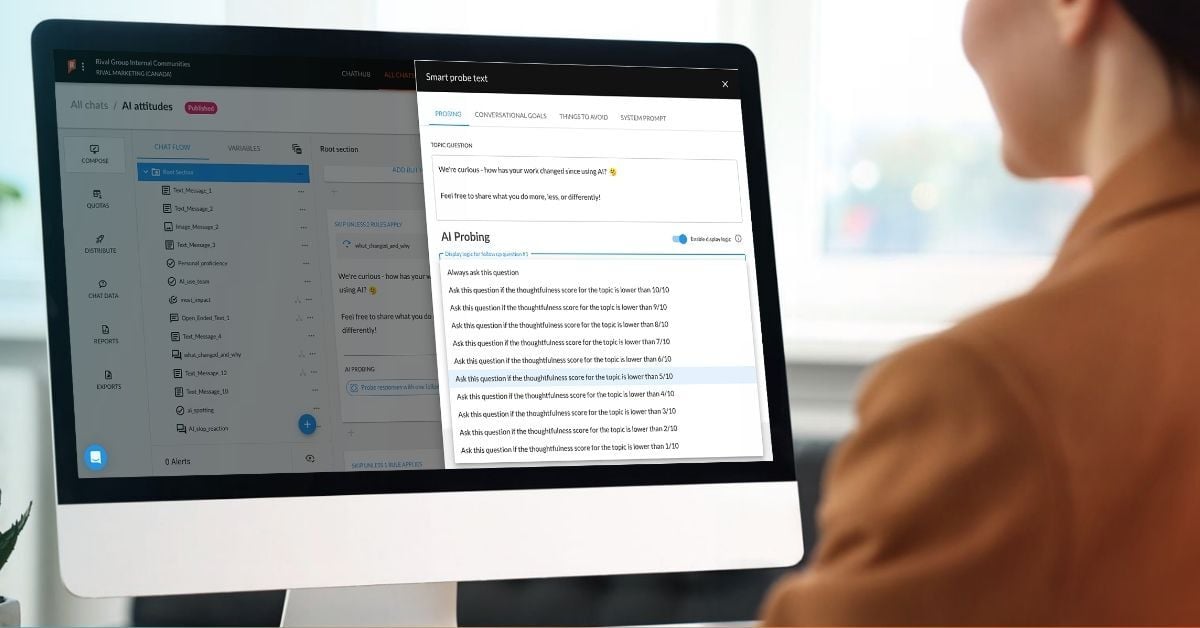

For unstructured data, Rival’s approach connects capabilities such as AI Smart Probe, Thoughtfulness Scoring, AI Summarization and Insight Reels into orchestrated systems, including its Unstructured Data Agent. These systems surface patterns, generate drafts and package insights while preserving clear links back to the underlying responses.

Human oversight is embedded throughout. Researchers are able to review, refine and validate AI-generated outputs before they are used in analysis or reporting, ensuring results remain fit for purpose and grounded in context.

Rival designs AI capabilities to augment research workflows, not replace or completely automate them. As a result, validation and review are built into how AI outputs are generated and used. AI-generated insights are treated as drafts or inputs that require researcher inspection, interpretation and approval before they are incorporated into analysis or reporting.

From a technical standpoint, Rival evaluates AI outputs using structured testing and evaluation pipelines. These processes are designed to monitor for issues such as hallucinations, output drift and inconsistencies that can arise from probabilistic models. When models are updated or changed, outputs are evaluated before changes are introduced into production workflows.
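One simple check that an evaluation pipeline of this kind could include is a grounding test: every quote attached to an AI-generated theme should appear in the source responses. The sketch below is an assumption about what such a check might look like, not a description of Rival's pipeline, and the function names and threshold are hypothetical.

```python
from typing import List, Sequence


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences
    do not cause false alarms."""
    return " ".join(text.lower().split())


def unsupported_quotes(quotes: Sequence[str], responses: Sequence[str]) -> List[str]:
    """Return quotes that do not occur in any source response,
    a possible sign of a hallucinated or altered quote."""
    corpus = [normalize(r) for r in responses]
    return [q for q in quotes if not any(normalize(q) in r for r in corpus)]


def passes_release_gate(
    quotes: Sequence[str], responses: Sequence[str], max_unsupported: int = 0
) -> bool:
    """Gate a model change: pass only if unsupported quotes stay within the limit."""
    return len(unsupported_quotes(quotes, responses)) <= max_unsupported
```

Running a check like this on a fixed test set before and after a model update gives a like-for-like comparison, although exact matching will not catch paraphrased or subtly misattributed content.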

From a methodological standpoint, Rival emphasizes human-in-the-loop practices. Features such as confidence indicators, traceability back to source responses and visible reasoning steps help researchers assess whether outputs are reliable and appropriate for the research objective. Low-confidence or ambiguous outputs are designed to draw attention rather than be accepted automatically.
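To make the idea of drawing attention to low-confidence outputs concrete, here is a hedged sketch of a review queue. The `AIOutput` fields, the 0.7 threshold and the ordering rule are illustrative assumptions. Every output still goes to a researcher; flagged items simply surface first.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class AIOutput:
    text: str
    confidence: float  # hypothetical score between 0 and 1
    source_ids: List[str] = field(default_factory=list)  # traceability links


def needs_attention(output: AIOutput, threshold: float = 0.7) -> bool:
    """Flag outputs that are low-confidence or cannot be traced to a source."""
    return output.confidence < threshold or not output.source_ids


def review_queue(outputs: List[AIOutput], threshold: float = 0.7) -> List[AIOutput]:
    """Order outputs for human review: flagged items first, then by
    ascending confidence. Nothing is accepted automatically."""
    return sorted(
        outputs,
        key=lambda o: (not needs_attention(o, threshold), o.confidence),
    )
```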

Rival continues to invest in evaluation frameworks and best practices for enterprise AI readiness, recognizing that validation is an ongoing process rather than a one-time check.

Rival’s AI capabilities rely on established foundation models, which share common limitations as outlined above. Rival mitigates these limitations through design and process. AI features are deployed with human oversight, ensuring that researchers review and guide outputs rather than relying on them autonomously. AI-generated insights are labeled clearly, and users retain the ability to edit, reject or replace them.

Rival also evaluates model updates carefully. New or upgraded models are tested and assessed before being introduced externally to determine whether changes materially improve outcomes and align with research quality standards.

By treating AI as a support tool rather than a source of truth, Rival reduces the risk that model limitations compromise research integrity.

Rival designs its AI capabilities with a duty of care to both research participants and researchers. For participants, AI-driven features are built to preserve respectful, natural and non-intrusive experiences. For example, its AI Smart Probe feature is designed to ask relevant follow-up questions only when additional depth is needed, avoiding excessive or inappropriate prompts.

For researchers, Rival emphasizes augmentation over automation. AI is intended to reduce cognitive load, not introduce new sources of risk or confusion. Interfaces are designed to make it easy to inspect outputs, understand how they were generated and intervene when judgment or context is required.

More broadly, Rival’s approach reflects the view that research decisions involve ethical nuance, empathy and contextual understanding that AI alone cannot provide. By keeping humans in control and making AI use explicit, Rival seeks to reduce the risk of unintended harm, misinterpretation or misuse.

Rival is explicit about when and where AI is used within its platform. AI-generated insights are clearly labeled as “AI Insights,” allowing researchers and stakeholders to distinguish between human-generated and AI-assisted outputs at a glance.

During survey design, questions or elements generated with AI are identified as such, and researchers can review, modify or discard them before launch. AI features are opt-in by design, meaning they are turned off by default unless the user chooses to enable them.

Within certain features, such as AI Smart Probe, the prompts that guide the AI are visible to researchers, and end users can customize certain elements, such as topics to focus on or avoid. This supports human oversight, helps users understand how the system is operating and reinforces transparency around AI behavior rather than treating it as a black box.
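As a rough sketch of how researcher-supplied focus and avoid guidance could be folded into a visible prompt, consider the example below. The function name, parameters and prompt wording are hypothetical and do not reflect the actual AI Smart Probe prompt.

```python
from typing import Sequence


def build_probe_instructions(
    base_prompt: str,
    focus: Sequence[str] = (),
    avoid: Sequence[str] = (),
) -> str:
    """Combine a fixed base prompt with researcher guidance into plain text
    that can be shown to the researcher exactly as the model receives it."""
    lines = [base_prompt.strip()]
    if focus:
        lines.append("Focus on: " + "; ".join(focus))
    if avoid:
        lines.append("Avoid: " + "; ".join(avoid))
    return "\n".join(lines)


if __name__ == "__main__":
    print(
        build_probe_instructions(
            "Ask one short follow-up question.",
            focus=["price", "delivery"],
            avoid=["competitor names"],
        )
    )
```

Because the result is ordinary readable text, the same string can be displayed in the interface and sent to the model, which keeps the guidance inspectable.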

Rival’s AI approach is grounded in human oversight, transparency and responsible use rather than autonomous decision-making. Ethical principles are operationalized through product design choices, including opt-in controls, visible AI labeling and workflows that require human review before outputs are finalized.

Human judgment governs AI behavior through prompt design, evaluation criteria and review checkpoints. Researchers validate themes, assess tone and apply methodological and ethical judgment before insights are used for decision-making.

Rather than relying on AI to enforce ethics independently, Rival ensures ethical principles are embedded through governance, design and researcher involvement at every stage of the workflow.

Human oversight is integrated throughout Rival’s AI development and deployment processes. AI features are designed to support researchers in discrete tasks while preserving human control over interpretation, analysis and reporting.

Rival Labs plays a key role in responsible innovation by providing a dedicated environment for experimentation. New AI capabilities are tested, refined and evaluated in this sandbox before being considered for broader release. This allows Rival to explore emerging technologies without exposing clients or participants to unproven approaches.

In production, human-in-the-loop practices ensure researchers remain accountable for decisions, quality and ethical considerations. AI outputs are reviewed, guided and refined by humans, and no AI capability operates autonomously in a way that removes researcher responsibility.

This approach allows Rival to innovate while maintaining alignment with ethical standards, regulatory expectations and research best practices. The company’s internal AI Acceptable Use Policy provides ethical guidelines and obligations for all employees.

Rival does not train proprietary AI models and does not use client data to train foundation models. As a result, this question does not apply in the context of managing or assessing AI training data.

AI features are applied to client-owned research data within defined workflows, with researchers responsible for reviewing and validating outputs before use.

Rival does not train AI models and therefore does not manage or document AI training data lineage.

Input data processed by AI features originates from client-managed research conducted on the Rival platform. The use and handling of that data are governed by client agreements and existing platform data governance controls.

Rival’s privacy notice is available at: https://www.rivaltech.com/legal/privacy

Rival complies with applicable data protection laws and follows established industry best practices to protect the privacy of research participants. This includes adherence to GDPR and enterprise-grade security and governance controls.

Participant consent and data handling practices are embedded into research workflows and managed via sub-processor governance and contractual controls, regardless of whether AI-assisted features are used.

Rival’s AI capabilities are built using secure APIs provided by established AI model providers, including OpenAI and other large language model vendors. These providers are selected through due diligence and are reviewed on a regular basis, or whenever the scope of services changes, to ensure their security posture remains appropriate.

Within the Rival platform, AI features operate under the same enterprise security controls as all other functionality and are overseen by a dedicated internal security team. Rival maintains SOC 2 compliance, which governs access controls, data handling, continuous logging and monitoring, incident response, disaster recovery and operational resilience across the platform. Rival’s information security practices are also ISO/IEC 27001:2022 certified, supporting a structured, risk-based approach to protecting information assets and improving controls over time.

AI capabilities are deployed in a controlled manner, and new models or updates are evaluated before being introduced into production environments, ensuring resilience is addressed at both the vendor and platform levels.

Yes. Data ownership, intellectual property rights and usage permissions are defined and communicated through contractual agreements, including statements of work and service agreements.

Clients retain ownership of their research data, subject to the terms of the engagement.

Yes. Rival restricts how data can be used, processed and shared based on client requirements, contractual obligations and applicable privacy and data protection laws. Data handling practices, including where data resides and how it may be processed, are defined through client agreements, statements of work and platform controls.

These restrictions ensure that client data is used only for approved purposes and handled in accordance with legal, privacy and data sovereignty requirements. Operational security controls, including those supported by Rival’s SOC 2 compliance, help enforce these requirements.

Ownership of research outputs is governed through contractual agreements with clients.

Clients own their study deliverables and outputs generated for them from their inputs. Rival does not claim ownership of client inputs or outputs. Rival retains ownership of its platform, models, software and know-how.

Rival’s approach to AI innovation has always been about augmentation over automation. It’s about elevating the creativity of researchers rather than replacing it.

Our main goal in answering the questions outlined by Esomar is to provide transparency, clarity and confidence to those who are considering Rival’s suite of AI tools. Things in the AI world are always changing, and we know we can’t possibly cover everything in this guide. For the latest news about Rival’s AI innovations, please visit rivaltech.com/ai.
